In today’s fast-paced digital world, staying ahead in technology is more important than ever. Technology Moment is your go-to source for the latest insights, trends, and advancements in artificial intelligence, machine learning, and deep learning. Whether you’re an AI enthusiast, a data scientist, or a tech professional looking to enhance your skills, we bring you well-researched, expert-driven content that keeps you informed and empowered.

In this blog, “Deep Learning Techniques – Improve Your Models Today,” we dive into essential strategies to enhance deep learning models, ensuring better accuracy, efficiency, and real-world application. From hyperparameter tuning to transfer learning and data augmentation, this guide will help you take your deep learning expertise to the next level. Let’s explore how to optimize your models for superior performance!

Deep learning has revolutionized the world of artificial intelligence (AI), enabling machines to recognize images, understand speech, and even generate human-like text. It’s the driving force behind breakthroughs in self-driving cars, medical image analysis, and advanced natural language processing (NLP) models like ChatGPT. However, while deep learning models have immense potential, they often require careful tuning and optimization to perform at their best.

Why Improving Deep Learning Models is Crucial

Building a deep learning model is not just about stacking multiple layers of neurons together and expecting great results. A poorly trained model can lead to:

- Overfitting – when a model memorizes training data but fails on new, unseen data.

- Slow Training Times – when the model takes too long to learn patterns.

- High Computational Costs – inefficient models require excessive GPU power and resources.

By applying the right deep learning techniques, you can improve accuracy, reduce training time, and make your model more efficient. Whether you’re working on computer vision, NLP, or predictive analytics, fine-tuning your deep learning approach can lead to significant performance gains.

What This Article Covers

In this article, we will explore essential techniques to enhance your deep learning models. We’ll cover:

- The key components of a deep learning model

- Advanced strategies like hyperparameter tuning, data augmentation, and transfer learning

- Methods to prevent overfitting and underfitting

- The future of deep learning optimization

Understanding the Basics of Deep Learning

Deep learning is one of the most revolutionary fields in artificial intelligence (AI), enabling machines to perform complex tasks that traditionally required human intelligence. But what exactly is deep learning, and how does it differ from traditional machine learning? Let’s dive into the fundamentals to build a strong foundation.

What is Deep Learning?

Deep learning is a subset of machine learning that uses artificial neural networks, loosely inspired by the way the human brain processes information. These networks have multiple layers (hence the term “deep”), which enable them to learn and extract patterns from large datasets.

Deep learning is responsible for many advancements in AI, such as:

- Self-driving cars that recognize pedestrians, traffic lights, and other vehicles

- Speech recognition systems like Siri and Google Assistant

- Medical diagnostics that detect diseases from X-rays and MRIs

- Recommendation systems that personalize your Netflix or YouTube experience

How Deep Learning Differs from Traditional Machine Learning

Machine learning and deep learning are closely related, but they are not the same. Here’s how they differ:

| Feature | Traditional Machine Learning | Deep Learning |
|---|---|---|
| Feature Engineering | Requires manual selection and tuning of features | Learns features automatically from data |
| Performance with Big Data | Gains tend to plateau as datasets grow | Typically keeps improving with more data |
| Interpretability | More explainable and transparent | Often considered a “black box” |
| Computational Power | Works well on standard CPUs | Usually needs GPUs/TPUs to train in reasonable time |
| Learning Method | Relies on algorithms like decision trees, SVMs, and linear regression | Uses neural networks with multiple hidden layers |

In simpler terms, traditional machine learning requires human intervention to select the right features, whereas deep learning learns those features by itself, which makes it more powerful for complex tasks when enough data and compute are available.

Common Applications of Deep Learning

Deep learning is widely used across various industries. Let’s explore some real-world applications:

1. Image and Object Recognition

Deep learning powers computer vision applications, enabling systems to detect faces, recognize objects, and even diagnose diseases in medical imaging. Examples include:

- Facial recognition in smartphones and security systems

- Autonomous vehicles detecting traffic signals and pedestrians

- Medical imaging for detecting tumors in MRI scans

2. Natural Language Processing (NLP)

Deep learning allows AI to understand and generate human language. It is the backbone of:

- Chatbots and virtual assistants like Alexa and Siri

- Language translation tools like Google Translate

- Text sentiment analysis for analyzing customer reviews

3. Speech Recognition

Deep learning enables AI to convert spoken words into text, which is used in:

- Voice commands for smart home devices

- Automatic transcription services

- Call center automation

4. Recommendation Systems

Streaming services and e-commerce platforms use deep learning to recommend products based on user behavior. Examples include:

- Netflix suggesting movies based on your watch history

- Amazon recommending products based on your shopping behavior

- Spotify creating personalized playlists

5. Robotics and Automation

Deep learning plays a critical role in robotics, enabling robots to:

- Perform complex tasks such as assembly line manufacturing

- Navigate on their own, as in autonomous drones and delivery robots

- Enhance prosthetic limb control through brain-machine interfaces

Table of Contents

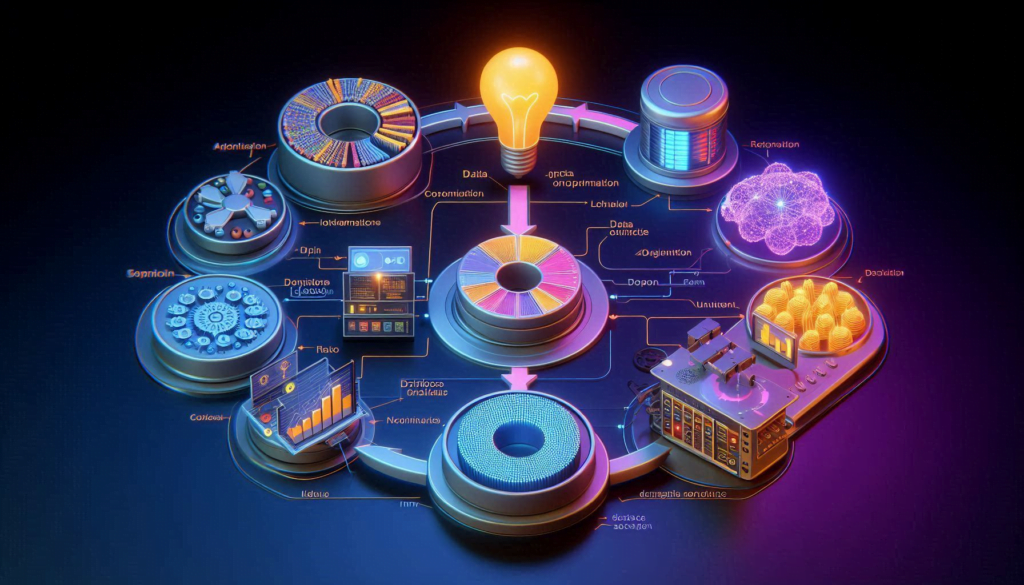

Key Components of a Deep Learning Model

Deep learning models are built using several key components, each playing a crucial role in learning and making predictions. Understanding these components is essential for optimizing model performance and achieving better accuracy. Let’s break down the fundamental elements of a deep learning model.

1. Neural Networks and Layers

At the core of any deep learning model is a neural network, which consists of multiple layers of interconnected neurons.

Types of Layers in a Neural Network:

- Input Layer: Receives the raw data; its number of neurons corresponds to the number of features in the dataset.

- Hidden Layers: These layers contain neurons that process and transform the input data. Adding hidden layers makes the network deeper, letting it learn more complex patterns, though deeper networks are also harder to train.

- Output Layer: The final layer produces the result, which can be a classification label (e.g., cat vs. dog) or a numerical value (e.g., predicting house prices).

Each neuron in a layer computes a weighted sum of its inputs plus a bias term, passes the result through an activation function, and sends the output to the next layer.
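To make this concrete, here is a minimal sketch of one fully connected layer in plain Python, with no framework assumed. The function name dense_forward and the example numbers are illustrative only; real frameworks compute the same thing for a whole layer as one matrix multiplication.

```python
def relu(z):
    # Activation: negative values become 0
    return max(0.0, z)

def dense_forward(inputs, weights, biases, activation):
    """Forward pass of one fully connected layer.

    weights[j][i] is the weight from input i to neuron j; biases[j] is neuron j's bias.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the neuron's bias
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # The activation function turns the weighted sum into the neuron's output
        outputs.append(activation(z))
    return outputs

# Example: 3 input features -> 2 neurons
x = [1.0, 2.0, -1.0]
W = [[0.5, 0.25, 1.0],    # weights of neuron 0
     [-1.0, 0.5, 0.0]]    # weights of neuron 1
b = [0.1, -0.3]
print(dense_forward(x, W, b, relu))  # [0.1, 0.0]: neuron 1's sum is negative, so ReLU zeroes it
```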

2. Activation Functions

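An activation function transforms a neuron’s weighted input into its output. Its key role is to introduce non-linearity: without it, any stack of layers would collapse into a single linear transformation, no matter how deep the network is.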

Common Activation Functions:

- ReLU (Rectified Linear Unit): Most widely used in deep learning; replaces negative values with zero to introduce non-linearity.

- Sigmoid: Converts values into a range between 0 and 1, often used in binary classification problems.

- Tanh (Hyperbolic Tangent): Similar to sigmoid but outputs values between -1 and 1, often used in RNNs.

- Softmax: Converts outputs into probability distributions, mainly used in multi-class classification problems.

Choosing the right activation function significantly impacts how well a deep learning model learns from data.
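The four functions above are short enough to write out directly. This is a plain-Python sketch for illustration (frameworks ship optimized, vectorized versions); the max-subtraction in softmax is a standard numerical-stability trick.

```python
import math

def relu(z):
    # Negative inputs become 0; positive inputs pass through unchanged
    return max(0.0, z)

def sigmoid(z):
    # Squashes any real number into (0, 1); two branches avoid overflow in exp()
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    # Like sigmoid but zero-centered, with outputs in (-1, 1)
    return math.tanh(z)

def softmax(logits):
    # Subtracting the max logit prevents overflow and does not change the result
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))     # 0.0 3.0
print(sigmoid(0.0), tanh(0.0))   # 0.5 0.0
print(softmax([1.0, 2.0, 3.0]))  # three probabilities that sum to 1, largest for 3.0
```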

3. Loss Functions and Optimization

A loss function measures how well a deep learning model is performing by comparing predictions to actual values. The model’s goal is to minimize this loss by adjusting internal parameters (weights and biases).

Types of Loss Functions:

- Mean Squared Error (MSE): Used in regression tasks to measure the average squared difference between predicted and actual values.

- Cross-Entropy Loss: Commonly used in classification problems to measure the difference between predicted and actual probability distributions.
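Both losses reduce to a line or two of arithmetic. In this sketch, cross_entropy scores a single example given the index of the true class and a probability vector (for instance a softmax output); batch versions simply average it over examples. The eps guard is an implementation choice, not part of the definition.

```python
import math

def mean_squared_error(y_true, y_pred):
    # Average of squared differences between targets and predictions
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(true_class, probs, eps=1e-12):
    # Negative log of the probability assigned to the correct class.
    # eps guards against log(0) if the model gives the true class zero probability.
    return -math.log(max(probs[true_class], eps))

print(mean_squared_error([3.0, 5.0], [2.0, 7.0]))  # (1^2 + 2^2) / 2 = 2.5
print(cross_entropy(2, [0.1, 0.2, 0.7]))           # -log(0.7), about 0.357
```

A confident wrong answer is punished heavily: cross_entropy(2, [0.7, 0.2, 0.1]) is -log(0.1), about 2.3.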

To optimize a model, deep learning uses optimizers like:

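- Stochastic Gradient Descent (SGD): Updates weights using the gradient computed on a single example or a small random mini-batch instead of the full dataset, so each update is cheap and fast.

- Adam Optimizer: An adaptive optimizer that scales the step size for each parameter using running averages of past gradients and squared gradients; widely used because it works well with little tuning.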

Loss functions and optimizers work together to ensure the model learns from its mistakes and improves over time.
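Here is a hedged sketch of how the two optimizers differ, applied to a single scalar weight on the toy loss f(w) = (w - 3)^2. The Adam update follows the standard published rule with bias correction; beta1 = 0.9, beta2 = 0.999 and eps = 1e-8 are the commonly used defaults, while lr = 0.1 is simply chosen for this toy problem.

```python
import math

def sgd_step(w, grad, lr=0.1):
    # Plain SGD: move against the gradient by a fixed step size
    return w - lr * grad

class Adam:
    """Adam update for a single scalar parameter."""

    def __init__(self, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # running average of gradients (first moment)
        self.v = 0.0  # running average of squared gradients (second moment)
        self.t = 0    # step counter, used for bias correction

    def step(self, w, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        # Correct the bias toward zero caused by starting m and v at 0
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        # Per-parameter step: a history of large gradients shrinks the effective step
        return w - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)

# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w_sgd, w_adam, adam = 0.0, 0.0, Adam(lr=0.1)
for _ in range(1000):
    w_sgd = sgd_step(w_sgd, 2 * (w_sgd - 3))
    w_adam = adam.step(w_adam, 2 * (w_adam - 3))
print(round(w_sgd, 4), round(w_adam, 4))  # both end up close to 3
```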

4. Training and Validation

Once a deep learning model is built, it needs to be trained and validated to ensure it generalizes well to new data.

Steps in Model Training:

- The model receives training data as input.

- Predictions are made using the current weights (randomly initialized before training starts).

- The loss function calculates the error between predictions and actual values.

- The optimizer adjusts weights to reduce this error.

- The process repeats for multiple epochs until the model reaches satisfactory performance.
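The five steps above map directly onto a training loop. This minimal sketch fits a hypothetical toy dataset (y = 2x + 1) with a linear model in plain Python; for simplicity it uses full-batch gradient descent on mean squared error rather than mini-batches, and the learning rate and epoch count are illustrative choices.

```python
import random

# Toy dataset (hypothetical): y = 2x + 1, with x in [0, 1]
xs = [i / 10 for i in range(11)]
ys = [2 * x + 1 for x in xs]

rng = random.Random(0)
w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initial weights
lr, epochs = 0.1, 2000

for epoch in range(epochs):
    # 1) and 2) feed the training data and predict with the current weights
    preds = [w * x + b for x in xs]
    # 3) loss: mean squared error between predictions and targets
    errors = [p - y for p, y in zip(preds, ys)]
    loss = sum(e * e for e in errors) / len(xs)
    # 4) gradients of the loss with respect to w and b, then an update step
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = 2 * sum(errors) / len(xs)
    w -= lr * grad_w
    b -= lr * grad_b
    # 5) the loop repeats for the next epoch

print(f"w={w:.3f}, b={b:.3f}, final loss={loss:.2e}")  # w and b approach 2 and 1
```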

Importance of Validation:

- Training Set: Used to train the model.

- Validation Set: Used to tune hyperparameters and detect overfitting during training.

- Test Set: Used to evaluate the final performance of the model on unseen data.
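A simple way to produce the three sets is to shuffle once with a fixed seed and slice. The 70/15/15 proportions below are a common convention, not a rule, and the function name is hypothetical; for classification with rare classes, a stratified split is usually safer.

```python
import random

def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, then carve off disjoint validation and test portions."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed makes the split reproducible
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

The test set should be touched only once, at the very end; tuning against it turns it into a second validation set.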

Effective Deep Learning Techniques to Improve Model Performance

Deep learning models can be incredibly powerful, but achieving high accuracy and efficiency isn’t always straightforward. Without the right techniques, models may underperform, overfit, or require excessive computational resources. Here are some of the most effective deep learning techniques that can significantly improve model performance.

1. Choosing the Right Architecture

The structure of your deep learning model plays a vital role in its performance. Different tasks require different architectures.

- Convolutional Neural Networks (CNNs) – Best for image processing tasks like classification, object detection, and segmentation. CNNs use filters to capture spatial relationships in images.

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTMs) – Used for sequential data like speech recognition, text generation, and stock price predictions. These models retain information across time steps.

- Transformers (e.g., BERT, GPT-4, ViT) – Ideal for NLP tasks and now even used for image recognition.

Choosing the right architecture ensures that your model efficiently learns patterns from the data.

2. Hyperparameter Tuning

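Hyperparameters are settings chosen before training, such as the learning rate, batch size, and number of epochs, that control how the model learns.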

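- Learning Rate Optimization – If the learning rate is too high, training may oscillate or diverge; if it is too low, training converges slowly or stalls. Techniques like learning rate scheduling (gradually lowering the rate during training) and adaptive optimizers (Adam, RMSprop) help.

- Batch Size and Epoch Tuning – A smaller batch size gives more frequent, noisier updates, which often improves generalization; a larger batch size makes better use of the hardware per step but can generalize worse unless the learning rate is adjusted. Choosing the number of epochs carefully (or using early stopping) guards against both underfitting and overfitting.

- Grid Search vs. Random Search – Grid search exhaustively tests every combination from a predefined grid of values, while random search samples combinations at random and often finds good settings with far fewer trials, especially when only a few hyperparameters really matter.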

Proper hyperparameter tuning prevents model inefficiencies and ensures faster convergence.
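A minimal random-search loop looks like the sketch below. The validation_loss function is a hypothetical stand-in: in practice it would train the model with the given settings and return its validation loss. Sampling the learning rate on a log scale is a common convention, because useful values span several orders of magnitude.

```python
import math
import random

def validation_loss(lr, batch_size):
    """Hypothetical stand-in for 'train the model, return validation loss'.

    This toy function is best near lr = 10**-2.5 and batch_size = 64.
    """
    return (math.log10(lr) + 2.5) ** 2 + 0.1 * abs(math.log2(batch_size / 64))

def random_search(n_trials=100, seed=0):
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        # Log-uniform sampling: every order of magnitude in [1e-5, 1e-1] is equally likely
        lr = 10 ** rng.uniform(-5, -1)
        batch_size = rng.choice([16, 32, 64, 128])
        score = validation_loss(lr, batch_size)
        if best is None or score < best[0]:
            best = (score, lr, batch_size)
    return best

score, lr, batch_size = random_search()
print(f"best lr={lr:.2e}, batch_size={batch_size}, val loss={score:.3f}")
```

A grid over the same ranges would spend many trials repeating the same few learning-rate values; random search tries a new value of every hyperparameter on each trial, so the important one gets explored more finely for the same budget.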

3. Data Augmentation and Preprocessing

Data is the foundation of any deep learning model. More data usually leads to better performance, but collecting large datasets is expensive. Instead, data augmentation and preprocessing techniques help maximize existing data.

- Image Augmentation – Techniques like rotation, flipping, cropping, and adding noise help improve model robustness in image-related tasks.

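- Text Preprocessing for NLP – Tokenization, stemming, lemmatization, and stop-word removal can help classical NLP pipelines; modern transformer models mostly rely on their own subword tokenizers instead.

- Handling Imbalanced Datasets – Techniques like oversampling, undersampling, and synthetic data generation (e.g., SMOTE) balance the classes so the model does not simply ignore the rare ones, which improves metrics such as minority-class recall.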

Better data preparation leads to more reliable and generalized deep learning models.
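Two of the image augmentations mentioned above, horizontal flipping and additive noise, can be sketched in plain Python. Here an image is assumed to be a list of rows of grayscale pixel values in [0, 1]; libraries such as torchvision or Keras preprocessing layers provide production versions that work on real image tensors.

```python
import random

def horizontal_flip(image):
    # Mirror each row left-to-right; the label (e.g. "cat") does not change
    return [row[::-1] for row in image]

def add_noise(image, std=0.05, rng=None):
    # Add small Gaussian noise to every pixel, then clip back into [0, 1]
    rng = rng or random.Random()
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, std))) for px in row] for row in image]

def augment(image, rng):
    # Apply transforms randomly, so each epoch sees a slightly different version
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return add_noise(image, rng=rng)

img = [[0.0, 0.5, 1.0],
       [0.2, 0.4, 0.6]]  # toy 2x3 grayscale image
print(horizontal_flip(img))  # [[1.0, 0.5, 0.0], [0.6, 0.4, 0.2]]
print(augment(img, random.Random(0)))
```

Only use transforms that preserve the label: a flipped cat is still a cat, but flipping a road sign with text or a handwritten digit can change its meaning.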

4. Transfer Learning and Pretrained Models

Instead of training models from scratch, transfer learning allows you to leverage existing models trained on large datasets.

- How Transfer Learning Works – You take a pretrained model (e.g., ResNet, BERT, or GPT) and fine-tune it on your specific dataset.

- When to Use Pretrained Models – If you have limited data, a pretrained model provides a strong starting point.

- Best Sources for Pretrained Models – Platforms like TensorFlow Hub, Hugging Face, and PyTorch Hub provide state-of-the-art pretrained models.

Transfer learning often saves time, improves accuracy, and reduces the need for large datasets.

5. Regularization Techniques

Regularization prevents overfitting, ensuring the model generalizes well to unseen data.

- Dropout – Randomly drops neurons during training to prevent over-reliance on specific features.

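- Batch Normalization – Normalizes each layer’s activations using the mean and variance of the current mini-batch, which stabilizes training and can have a mild regularizing effect.

- L1 and L2 Regularization – Both add a penalty on the weights to the loss. L1 (the sum of absolute weights, as in lasso regression) drives many weights to exactly zero, acting like feature selection; L2 (the sum of squared weights, as in ridge regression, often called weight decay) keeps weights small.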

Regularization techniques enhance model stability and prevent overfitting.
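Dropout and L1/L2 penalties are simple to express directly. The sketch below uses the "inverted dropout" formulation (scaling survivors during training), which is how layers such as PyTorch’s torch.nn.Dropout and Keras’s Dropout behave; the function names, the list-of-floats representation, and the data_loss value are illustrative assumptions.

```python
import random

def dropout(activations, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each activation with probability p during training.

    Survivors are scaled by 1 / (1 - p) so the expected activation is unchanged,
    which means nothing needs rescaling at inference time.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l1_penalty(weights, lam):
    # Sum of absolute weights: tends to push small weights to exactly zero
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    # Sum of squared weights: discourages any single weight from growing large
    return lam * sum(w * w for w in weights)

weights = [1.0, -2.0, 0.5]
data_loss = 0.8  # hypothetical loss on the current training batch
total_loss = data_loss + l2_penalty(weights, lam=0.01)  # what the optimizer would minimize
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=random.Random(0)))
print(l1_penalty(weights, 0.1), total_loss)
```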

6. Optimizing Model Training

Efficient training methods can significantly reduce computational costs while improving accuracy.

- Using GPUs and TPUs – Specialized hardware speeds up deep learning model training.

- Mini-Batch Gradient Descent – Instead of updating weights after every data point (SGD) or entire dataset (batch gradient descent), mini-batch gradient descent balances speed and stability.

- Parallel Processing and Distributed Training – Splitting the work across multiple GPUs or cloud instances (e.g., TensorFlow’s tf.distribute.MirroredStrategy, which replicates the model on each GPU of a single machine) speeds up training for large models.

Efficient training ensures that models learn faster without compromising accuracy.
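The mini-batch idea comes down to how the data is sliced each epoch. This sketch yields shuffled index batches; the generator name is hypothetical, and a real loop would compute the loss and gradients on each batch before updating the weights.

```python
import random

def minibatches(n_samples, batch_size, rng):
    """Yield lists of indices covering one shuffled pass over the data (one epoch)."""
    indices = list(range(n_samples))
    rng.shuffle(indices)  # reshuffle every epoch so batches differ
    for start in range(0, n_samples, batch_size):
        yield indices[start:start + batch_size]

rng = random.Random(0)
for batch in minibatches(10, batch_size=4, rng=rng):
    # In a real loop: loss and gradients on this batch only, then one weight update
    print(batch)  # two batches of 4 indices, then a final batch of 2
```

Setting batch_size=1 recovers the per-example updates of SGD, and batch_size=n_samples recovers full-batch gradient descent.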

7. Fine-Tuning for Better Performance

Fine-tuning involves making small adjustments to improve model accuracy.

- Adjusting Model Parameters – Tweaking learning rates, optimizer settings, and network depths can improve results.

- Unfreezing Layers in Transfer Learning – First train only the newly added top layers on top of the frozen pretrained model, then gradually unfreeze deeper layers (usually with a lower learning rate) so the model can adapt to your domain.

- Customizing Loss Functions – Some tasks require specialized loss functions like Focal Loss (for imbalanced data) or Hinge Loss (for SVMs).

Fine-tuning can squeeze out extra performance from a deep learning model.
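As an example of a customized loss, here is a sketch of binary focal loss for a single example, following the formulation introduced by Lin et al. (2017) for object detection. The function name and defaults are illustrative; gamma = 2 is a commonly used value.

```python
import math

def binary_focal_loss(y_true, p, gamma=2.0, alpha=None, eps=1e-12):
    """Focal loss for one binary example.

    y_true is 0 or 1; p is the predicted probability of class 1.
    The factor (1 - p_t) ** gamma down-weights easy, confidently correct examples.
    With gamma=0 and alpha=None it reduces to ordinary binary cross-entropy.
    """
    p_t = p if y_true == 1 else 1.0 - p
    weight = (1.0 - p_t) ** gamma
    if alpha is not None:
        # Optional class-balancing factor: alpha for positives, 1 - alpha for negatives
        weight *= alpha if y_true == 1 else 1.0 - alpha
    return -weight * math.log(max(p_t, eps))

easy = binary_focal_loss(1, 0.95)            # confident and correct: heavily down-weighted
hard = binary_focal_loss(1, 0.30)            # leaning wrong: keeps most of its loss
bce = binary_focal_loss(1, 0.95, gamma=0.0)  # plain cross-entropy for comparison
print(easy, hard, bce)
```

Because the many easy examples of the majority class contribute little, training focuses on the hard, often minority-class, examples.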

8. Evaluating and Debugging Models

Understanding model behavior helps in diagnosing issues and making improvements.

- Common Deep Learning Evaluation Metrics – Accuracy, precision, recall, F1-score, and AUC-ROC for classification; MSE, RMSE, and MAE for regression.

- Identifying Overfitting and Underfitting – If training accuracy is high but validation accuracy is much lower, the model is overfitting; if both are low, it is underfitting.

- Debugging Techniques – TensorBoard helps visualize training curves and metrics, while SHAP and LIME explain individual predictions by showing which input features drove them.

Detailed evaluation ensures that models perform well on real-world data.
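Precision, recall, and F1 are worth computing by hand at least once. This sketch counts true positives, false positives, and false negatives for one positive class; in practice, scikit-learn’s sklearn.metrics module provides tested versions (precision_score, recall_score, f1_score).

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # 2 TP, 1 FP, 1 FN: all three equal 2/3
```

On this data, a model that always predicts 0 would still reach 5/8 accuracy while finding no positives at all, which is why accuracy alone can mislead on imbalanced data.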

The Future of Deep Learning Optimization

Deep learning is advancing at an unprecedented pace, and optimizing models is becoming more crucial than ever. As researchers and engineers push the boundaries of artificial intelligence (AI), new methods and technologies are emerging to make deep learning models more efficient, accurate, and accessible. The future of deep learning optimization revolves around improving computational efficiency, reducing training time, and making models more adaptable to real-world applications. Let’s explore some of the key trends shaping the future of deep learning optimization.

1. Emerging Trends in Model Efficiency

One of the biggest challenges in deep learning is the increasing size and complexity of models. Large-scale models, such as GPT and BERT, require immense computational power and vast datasets. The future of deep learning optimization is focused on making these models more efficient without compromising performance.

a. Model Compression Techniques

To reduce computational costs and memory requirements, researchers are developing various model compression techniques, including:

- Pruning – Removing weights, neurons, or even whole layers that contribute little to the output, producing a smaller, sparser model.

- Quantization – Reducing the precision of model weights (e.g., converting 32-bit floats to 8-bit integers) to save storage and speed up inference.

- Knowledge Distillation – Training a smaller “student” model to mimic a larger “teacher” model while retaining most of its accuracy.

These techniques will help deploy deep learning models on edge devices like smartphones, IoT devices, and embedded systems.
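Two of these techniques can be illustrated on a plain list of weights. The sketch below shows symmetric per-tensor int8 quantization (one scale factor for the whole tensor) and magnitude pruning; real toolkits add per-channel scales, calibration, and fine-tuning after pruning, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: float weights -> int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale for all-zero weights
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    # Recover approximate float weights; the error per weight is at most scale / 2
    return [v * scale for v in q]

def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

w = [0.42, -1.27, 0.003, 0.9, -0.05, 0.6]
q, scale = quantize_int8(w)
print(q)                        # small integers that fit in 8 bits
print(dequantize(q, scale))     # close to the original weights
print(magnitude_prune(w, 0.5))  # the 3 smallest-magnitude weights become 0.0
```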

b. Energy-Efficient Deep Learning

With the increasing environmental impact of AI training, the future will see a shift toward green AI—a focus on optimizing deep learning models to consume less energy. This includes:

- Adaptive computation – Allowing models to allocate computational power dynamically based on input complexity.

- Efficient architectures – Designing lightweight neural networks such as MobileNet and EfficientNet.

- Sparse models – Using sparsity in neural networks to reduce redundancy in computations.

These innovations will make AI more sustainable while maintaining high performance.

2. Role of Quantum Computing in Deep Learning

Quantum computing may eventually influence deep learning optimization. For certain problems, quantum algorithms promise speedups over classical methods, but whether today’s small, noisy quantum computers can offer a practical advantage for AI workloads remains an open research question.

a. Faster Training and Optimization

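Variational quantum algorithms, such as the Quantum Approximate Optimization Algorithm (QAOA) and the Variational Quantum Eigensolver (VQE), were designed for combinatorial optimization and quantum chemistry. Researchers are exploring whether related hybrid quantum-classical methods could help optimize model parameters, but a speedup for deep learning training has yet to be demonstrated.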

b. Solving Complex Problems

Researchers hope quantum computing could help with problems that are expensive for classical machines, such as:

- Optimizing hyperparameters with high-dimensional search spaces.

- Enhancing reinforcement learning models.

- Improving generative models like GANs for ultra-realistic image and text synthesis.

Although quantum computing is still in its early stages, integrating it with deep learning could be a game-changer in optimization.

3. Automation in Deep Learning Model Optimization

As deep learning models become more complex, the need for automation in model optimization is growing. Future advancements will focus on AutoML (Automated Machine Learning) and Neural Architecture Search (NAS) to reduce the manual effort required in building and fine-tuning models.

a. AutoML – Reducing the Need for Manual Tuning

AutoML platforms like Google’s AutoML, H2O.ai, and Microsoft Azure AutoML allow researchers to:

- Automate feature selection.

- Optimize hyperparameters.

- Select the best-performing neural network architecture.

This automation will make deep learning more accessible to non-experts and reduce the time required for model development.

b. Neural Architecture Search (NAS)

NAS is an AI-driven method that automatically designs deep learning architectures. Instead of manually trying different layer combinations, NAS uses techniques such as reinforcement learning, evolutionary algorithms, or gradient-based search to find a high-performing structure. Key benefits include:

- Discovering more efficient and high-performing models.

- Reducing reliance on human intuition for designing networks.

- Enhancing model adaptability to new tasks without extensive retraining.

With AutoML and NAS, the future of deep learning optimization will involve less manual work and more intelligent automation, allowing researchers to focus on high-level innovation rather than tedious parameter tuning.

4. Federated Learning and Decentralized AI

As concerns over data privacy and security grow, federated learning is emerging as a key trend in deep learning optimization. Instead of sending data to a centralized server for training, federated learning allows AI models to be trained locally on users’ devices while keeping data private.

a. Benefits of Federated Learning

- Enhanced privacy – Data never leaves the user’s device, reducing security risks.

- Lower bandwidth usage – Only model updates are shared, reducing network strain.

- Continuous improvement – Models keep improving from real-world usage across many devices.

Big tech companies like Google and Apple are already using federated learning to optimize AI-driven applications such as predictive text and voice recognition.
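The server-side step of federated learning can be as simple as a weighted average of the models returned by the devices, in the spirit of the FedAvg algorithm. This sketch covers one aggregation round only; real systems add client sampling, many rounds, and protections such as secure aggregation, and the device data here is hypothetical.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation.

    client_updates: list of (weights, num_examples) pairs, one per device.
    Each client counts in proportion to how much local data it trained on.
    Only these weight vectors reach the server; the raw data stays on the devices.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]

# Three hypothetical phones, each returning locally trained weights
updates = [([1.0, 2.0], 100), ([3.0, 0.0], 300), ([0.0, 4.0], 100)]
print(federated_average(updates))  # [2.0, 1.2]
```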

b. Decentralized AI for Edge Computing

Decentralized AI will enable deep learning models to run efficiently on edge devices (e.g., smart cameras, IoT sensors) without requiring constant internet connectivity. This will improve:

- Real-time decision-making in healthcare, autonomous vehicles, and industrial automation.

- Security and privacy by reducing dependence on centralized data storage.

- Scalability by distributing AI workloads across multiple devices.

Federated learning and decentralized AI will play a significant role in making deep learning models more adaptable and secure in the coming years.

5. The Integration of Deep Learning with Other AI Technologies

Deep learning optimization will not exist in isolation—it will increasingly merge with other AI advancements such as:

- Explainable AI (XAI) – Developing models that are transparent and interpretable, helping users understand why an AI system makes certain decisions.

- Self-Supervised Learning – Reducing the need for labeled data, making AI more efficient in learning from raw data.

- Multimodal AI – Combining vision, speech, and text processing for more comprehensive AI applications (e.g., AI-powered assistants that understand both images and spoken language).

These integrations will drive the next wave of AI breakthroughs, making deep learning more powerful, versatile, and user-friendly.

Conclusion: Bringing It All Together

Deep learning is a powerful field that continues to revolutionize artificial intelligence, enabling breakthroughs in fields such as computer vision, natural language processing, healthcare, finance, and robotics. However, simply building a deep learning model is not enough—it must be continuously optimized and improved to achieve the best performance.

In this article, we explored various techniques to enhance deep learning models, including hyperparameter tuning, data augmentation, transfer learning, regularization, and fine-tuning strategies. By applying these methods effectively, you can significantly improve your model’s accuracy, efficiency, and generalization capabilities.

One of the key takeaways is that understanding your data and model architecture is just as important as tuning parameters. A well-structured dataset, proper preprocessing techniques, and choosing the right neural network design can dramatically impact performance. Moreover, avoiding overfitting and underfitting through regularization techniques ensures that your model generalizes well to new, unseen data.

Another crucial aspect is leveraging computational resources. Training deep learning models requires substantial computational power, and using GPUs, TPUs, or distributed training can accelerate the process while optimizing resource utilization. Additionally, utilizing pretrained models and transfer learning can save time and improve results, especially when working with limited datasets.

Deep learning is an ever-evolving field, and staying up to date with the latest advancements is essential. With progress in automated machine learning (AutoML), efficient architectures and model compression, and longer-term research directions such as quantum computing, the future of deep learning optimization is promising.

To sum up, improving deep learning models requires a combination of technical expertise, experimentation, and continuous learning. By applying these techniques and adapting to new advancements, you can push your models to new heights and create more impactful AI solutions.

Frequently Asked Questions (FAQs)

What is the best way to optimize a deep learning model?

There is no single “best” way to optimize a deep learning model since it depends on factors like the dataset, model architecture, and task. However, some proven techniques include:

- Hyperparameter tuning: Adjusting learning rate, batch size, and optimizer settings can significantly impact performance.

- Regularization techniques: Methods like dropout, L1/L2 regularization, and batch normalization help prevent overfitting.

- Data augmentation: Creating variations of the input data can enhance model generalization.

- Transfer learning: Leveraging pretrained models can save time and improve accuracy.

- Efficient training strategies: Using GPUs/TPUs, mini-batch gradient descent, and parallel processing speeds up training.

By systematically experimenting with these techniques, you can achieve better model performance.

How do I prevent overfitting in deep learning?

Overfitting happens when a model learns the training data too well but fails to generalize to new data. To prevent overfitting:

- Use dropout layers: Dropout randomly disables neurons during training, making the model more robust.

- Apply early stopping: Stop training once validation loss has not improved for a set number of epochs (the “patience”), and keep the weights from the best epoch.

- Increase training data: More diverse training data reduces the likelihood of memorization.

- Use regularization techniques: L1 and L2 regularization prevent overly complex models.

- Cross-validation: Training and evaluating on several different splits of the data gives a more reliable estimate of how well the model generalizes, which helps you choose settings that do not overfit.

Combining these techniques ensures that your model performs well on real-world data.
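Early stopping with a patience counter can be sketched in a few lines. The class below is a hypothetical helper, not a library API (Keras, for example, offers a built-in keras.callbacks.EarlyStopping callback with patience and restore_best_weights options), and the validation losses are made-up numbers.

```python
class EarlyStopping:
    """Stop when validation loss has not improved by min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def should_stop(self, epoch, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember it (a real loop would also save a checkpoint here)
            self.best_loss, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Hypothetical validation losses: improving, then rising as the model starts to overfit
val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.63, 0.66, 0.70]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(epoch, loss):
        print(f"stopped at epoch {epoch}; best epoch was {stopper.best_epoch}")  # 6 and 3
        break
```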

Is transfer learning always beneficial?

Transfer learning can be incredibly useful, but it’s not always the best approach. It depends on:

- Dataset similarity: If your dataset is very different from the original dataset used to train a pretrained model, transfer learning may not help.

- Task complexity: For simpler tasks, training from scratch might be more efficient than fine-tuning a complex model.

- Computational resources: Transfer learning can save time and computational power, especially when using large models.

In most cases, transfer learning is a great way to boost performance, but always evaluate its effectiveness based on your specific use case.

What are some tools for deep learning model tuning?

Several tools and libraries can help optimize deep learning models:

- Grid Search & Random Search: Libraries like Scikit-Learn and Hyperopt automate hyperparameter tuning.

- TensorBoard: A visualization tool for monitoring training, loss curves, and performance metrics.

- Keras Tuner: A hyperparameter-tuning library for Keras/TensorFlow models (installed separately as the keras-tuner package).

- Weights & Biases: A tool for experiment tracking and hyperparameter optimization.

- Optuna: A flexible optimization framework for finding the best model parameters.

These tools make it easier to fine-tune your deep learning models and improve performance.

How does GPU acceleration help in deep learning?

Deep learning models require massive computations, and GPUs (Graphics Processing Units) accelerate training by:

- Parallel processing: GPUs have thousands of simple cores that run many operations at once, whereas CPUs have far fewer, more general-purpose cores.

- Faster matrix operations: Since deep learning heavily relies on matrix computations, GPUs significantly speed up training.

- Efficient backpropagation: Computing gradients during backpropagation is also dominated by matrix operations, so it runs much faster on GPUs.

- Handling larger models: GPUs allow training deeper neural networks that would be impractical on CPUs.

For even faster training, TPUs (Tensor Processing Units) and cloud-based solutions like Google Colab and AWS EC2 instances provide additional acceleration.